Abstract

In computational pathology, whole slide image (WSI) classification is challenging because slides have gigapixel resolution and fine-grained annotations are scarce. Multiple instance learning (MIL) offers a weakly supervised solution, yet refining instance-level information from bag-level labels remains difficult. Most conventional MIL methods use attention scores to estimate the instance importance scores (IIS) that contribute to the slide-label prediction, but these often produce skewed attention distributions and misidentify crucial instances. To address these issues, we propose an approach inspired by cooperative game theory: employing Shapley values to assess each instance’s contribution, thereby improving IIS estimation. We then accelerate the Shapley value computation using attention while retaining the improved instance identification and prioritization. We further introduce a framework for the progressive assignment of pseudo bags based on the estimated IIS, encouraging more balanced attention distributions in MIL models. Extensive experiments on the CAMELYON-16, BRACS, and TCGA-LUNG datasets show that our method outperforms existing state-of-the-art approaches while offering enhanced interpretability and class-wise insights. Our source code is available at https://github.com/RenaoYan/PMIL.
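To make the idea of a Shapley-based instance contribution concrete, the following is a minimal sketch of the generic permutation-sampling (Monte Carlo) Shapley estimator. It is not the attention-accelerated computation described above; the function `shapley_iis`, the callable `bag_score`, and the toy additive scoring function are hypothetical names introduced only for illustration.

```python
import random
from typing import Callable, List, Sequence


def shapley_iis(
    n_instances: int,
    bag_score: Callable[[Sequence[int]], float],
    n_permutations: int = 200,
    seed: int = 0,
) -> List[float]:
    """Estimate each instance's Shapley value by sampling random orderings.

    `bag_score` is an assumed stand-in for a bag-level prediction score
    computed on a subset (coalition) of instance indices.
    """
    rng = random.Random(seed)
    values = [0.0] * n_instances
    order = list(range(n_instances))
    for _ in range(n_permutations):
        rng.shuffle(order)
        coalition: List[int] = []
        prev = bag_score(coalition)
        for i in order:
            # Marginal contribution of instance i when it joins the coalition.
            coalition.append(i)
            cur = bag_score(coalition)
            values[i] += cur - prev
            prev = cur
    return [v / n_permutations for v in values]


# Toy additive "model" (an assumption): the bag score is a sum of fixed
# per-instance weights, so the Shapley values equal those weights.
TOY_WEIGHTS = [0.0, 0.5, 0.0, 0.3]


def toy_score(coalition: Sequence[int]) -> float:
    return sum(TOY_WEIGHTS[i] for i in coalition)


iis = shapley_iis(len(TOY_WEIGHTS), toy_score, n_permutations=50)
```

In this toy setting the estimated scores recover the per-instance weights, and they sum to the score of the full bag minus the score of the empty bag. With a real MIL model the value function is not additive, and the number of sampled permutations trades accuracy against cost.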

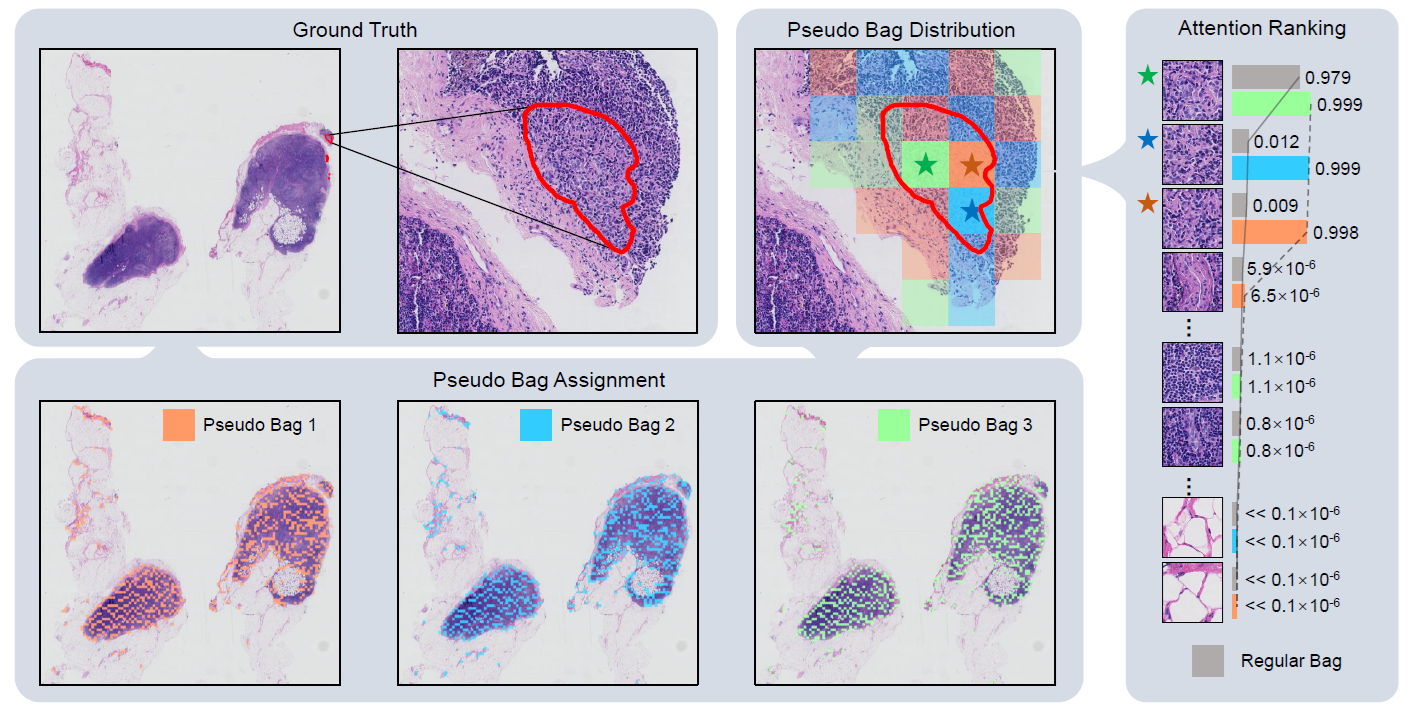

Schematic Diagram of the Proposed Framework

Guided by IIS estimated with Shapley values rather than the attention scores used in existing architectures, we propose a progressive pseudo bag augmented MIL framework termed PMIL. We incorporate the expectation-maximization (EM) algorithm to obtain the optimal pseudo bag label assignment. Specifically, in the M-step, the parameter θ of the MIL model is learned via our proposed pseudo bag augmentation; in the E-step, the minimization problem for ε is translated into an assignment optimization for pseudo bags, which minimizes the KL divergence between the predictions of the pseudo bags and those of the original bags. As illustrated in the figure, this iterative optimization is carried out over n training rounds.
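As a rough illustration of IIS-guided pseudo bag assignment, the sketch below ranks instances by their estimated IIS and deals them round-robin into pseudo bags, so that each pseudo bag receives a comparable share of high-scoring instances. The round-robin rule, the function names `assign_pseudo_bags` and `kl_divergence`, and the toy numbers are assumptions made for this example; the actual assignment strategy is described in the paper and released code.

```python
import math
from typing import List, Sequence


def assign_pseudo_bags(iis: Sequence[float], n_pseudo_bags: int) -> List[List[int]]:
    """Split one bag into pseudo bags, interleaving instances by IIS rank."""
    ranked = sorted(range(len(iis)), key=lambda i: iis[i], reverse=True)
    pseudo_bags: List[List[int]] = [[] for _ in range(n_pseudo_bags)]
    for rank, idx in enumerate(ranked):
        # Instance at rank r goes to pseudo bag r mod k (assumed rule).
        pseudo_bags[rank % n_pseudo_bags].append(idx)
    return pseudo_bags


def kl_divergence(p: Sequence[float], q: Sequence[float], eps: float = 1e-12) -> float:
    """KL(p || q) between two discrete class-probability vectors."""
    return sum(
        pi * math.log((pi + eps) / (qi + eps))
        for pi, qi in zip(p, q)
        if pi > 0
    )


# Toy IIS for a bag of six instances (made-up numbers).
toy_iis = [0.9, 0.1, 0.8, 0.05, 0.7, 0.0]
bags = assign_pseudo_bags(toy_iis, n_pseudo_bags=3)
# bags == [[0, 1], [2, 3], [4, 5]]

# Agreement between a pseudo bag prediction and the original bag prediction
# can be measured with the KL divergence (probabilities are made up).
gap = kl_divergence([0.9, 0.1], [0.6, 0.4])
```

A smaller KL divergence indicates that a pseudo bag's prediction stays close to that of its parent bag, which is the quantity the E-step described above seeks to minimize.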

For technical details, please refer to the original paper.

Downloads

Full Paper: https://doi.org/10.1109/TMI.2024.3453386

PyTorch Code: https://github.com/RenaoYan/PMIL

Reference

@article{yan2024shapley,
  title={Shapley values-enabled progressive pseudo bag augmentation for whole-slide image classification},
  author={Yan, Renao and Sun, Qiehe and Jin, Cheng and Liu, Yiqing and He, Yonghong and Guan, Tian and Chen, Hao},
  journal={IEEE Transactions on Medical Imaging},
  year={2025},
  volume={44},
  number={1},
  pages={588-597},
  publisher={IEEE},
  doi={10.1109/TMI.2024.3453386}
}